AI-Powered Social Content Creation for Public Officials: VoxiFly Pioneers Ethically Driven, Responsive Solutions

We take a middle-road approach to AI-assisted content generation in VoxiFly. Read our ethical take!

8/17/2024 · 4 min read

Since ChatGPT’s public release in November 2022, the world has become fixated on it. It surpassed 100 million users within its first two months, growing faster than any consumer software released up to that point. In a short time, it has had tangible impacts across a striking range of industries. In October 2023, the Biden White House issued Executive Order 14110 on the Safe, Secure, and Trustworthy Development and Use of Artificial Intelligence, which includes a section entitled “Reducing the Risks Posed by Synthetic Content” and underscores the urgency of AI governance.

Yet despite its seemingly sudden, meteoric rise, ChatGPT has predecessors that go back many years. The generative pre-trained transformer (GPT) architecture was first introduced by OpenAI in 2018 and has been successively updated since then. Moreover, ChatGPT follows a long line of conversational agents dating back as far as ELIZA in 1965, with Amazon’s Alexa in 2014 representing another important landmark.

What is different this time? Many in research and tech have recognized the public release of ChatGPT (and this family of technologies) as a tipping point for AI. They note that the key difference between ChatGPT and prior AI is its human-level performance across a wide variety of tasks. According to OpenAI, GPT-4, the model behind the premium version of ChatGPT, scored around the 90th percentile on the Uniform Bar Exam (MBE+MEE+MPT), including its essay-writing components. It also scored in roughly the 80th percentile or above on the LSAT, the SAT, and many AP exams.

To be clear, we have seen many impressive achievements of AI meeting or exceeding human performance in specialized arenas before. IBM’s Deep Blue, for example, defeated the world’s greatest chess player at the time, Garry Kasparov, in 1997. Today’s chess engines have ratings far beyond those of the best human players in the world. Likewise, IBM’s Watson fared impressively against Jeopardy! champions in 2011. But although Watson’s breadth of knowledge was extensive, it was asked to perform just one task: answer a structured, clearly defined question with a factual answer pulled directly from source materials. The breadth and flexibility we are seeing in ChatGPT, across both structured and unstructured tasks, is truly novel and gives it a distinctly human-like quality.

In fact, the human-likeness of generative AI has sparked widespread concern. Out of context, it can be impossible to tell whether a piece of text was written by a person or generated by ChatGPT. This means students can use ChatGPT to write their assignments. It also means that ChatGPT can be used to impersonate real users on social media in order to elicit personal information, spread disinformation, and otherwise influence public discourse.

ChatGPT is stunningly creative. A recent study placed ChatGPT in the 99th percentile for originality and the 93rd percentile for flexibility on the Torrance Tests of Creative Thinking, a benchmark measure of human creativity. ChatGPT can write poetry, prose, and dialogue that satisfy all manner of stylistic and topical constraints.

Like its human counterparts, ChatGPT also makes mistakes. Its imperfections are humanizing, even relatable. When ChatGPT does not know the answer to a question, it may simply make something up, or it may say “I don’t know.” Even its technological overlords cannot predict its outputs with certainty. This stochasticity and unpredictability is another cause for concern. How can we assure ourselves that our AI will not learn or say something harmful and unforeseen? Without that assurance, how can we trust it with meaningful decisions? Developers are working to build guardrails into these systems, but the guardrails are typically “hard-coded” rather than inherent to the model, and they tend to respond to egregious or malicious behavior after the fact rather than preempt it.

Against this backdrop, the past year has brought unprecedented discussion across research, policy, and mainstream communities about deep and consequential questions: when (or even whether) we should use this technology, for what purposes, and who should decide (so-called governance).

Well-intentioned, well-informed, and values-aligned individuals can readily disagree about the answers. That is because, we suggest, answering these questions requires envisioning and weighing the possible benefits and harms of generative AI technologies, an exceptionally difficult task. These (evolving) technologies will be embedded within complex sociotechnical systems, asymmetric power structures, and so forth. They can be used to exacerbate existing biases and further marginalize vulnerable groups, or they can be used to help level the playing field and lift us all. They will have a massive economic impact and help define the next global order.

In addition to the “big-ticket” questions (economic, political), there are quieter questions we now face about how we want to interact with one another as human beings. (Or maybe these are really the big-ticket questions?) Consider this simple example. During this summer’s Paris Olympics, Google ran a TV advertisement showing its AI chatbot Gemini drafting a fan letter for a young girl to send to her favorite athlete. The ad was met with immediate backlash, as viewers wondered why anyone would want to replace a child’s words with computer-generated text.

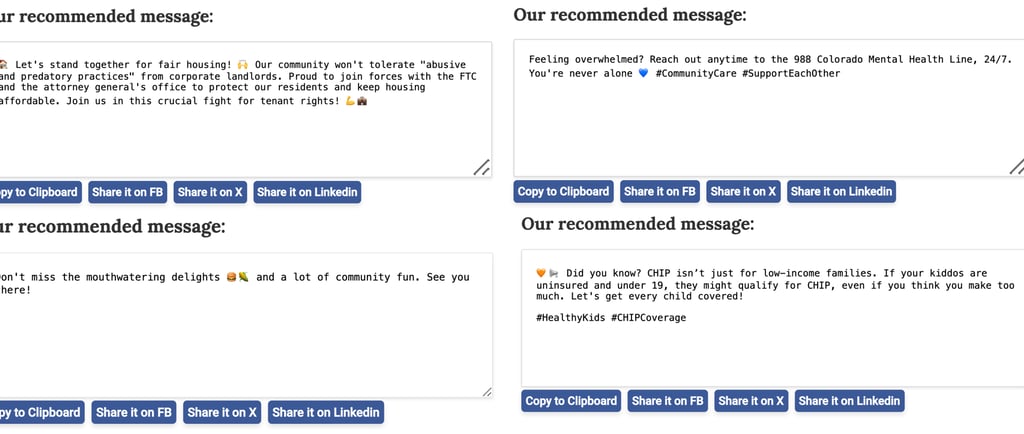

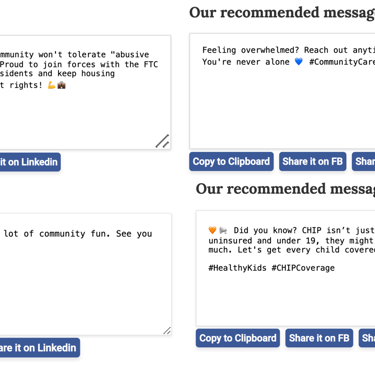

Operating in the world at this moment invites us to cautiously navigate a middle road. Having deep expertise in AI, we are well versed in its capabilities and limitations. The technologies underlying VoxiFly, our app to assist public officials with posting on social media, include both classical machine learning and generative AI (ChatGPT). These are not, however, deployed as “black boxes” or as solutions in and of themselves. Each plays a circumscribed, well-defined role in our pipeline, and the pipeline as a whole is a human-in-the-loop system.

Moreover, we actively develop, deploy, and evaluate our technologies in line with industry best practices as set out in NIST’s AI Risk Management Framework (AI RMF 1.0). This is a rapidly evolving domain, so subscribe and stay tuned for more on everything we’re doing to monitor and ensure ethics and safety.