Don’t believe your eyes?

AI doesn’t just create fakes — it creates doubt. And that doubt is reshaping the relationship between leaders and the people they serve.

9/3/2025 · 2 min read

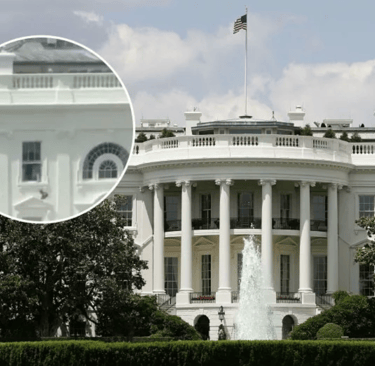

Many of us have now seen the viral video circulating on social media. It shows a person in white pants stepping out onto a windowsill on the second floor of the White House and tossing down what appears to be a black plastic bag. Later, someone tosses something long and white out the window. The video was first shared by the Instagram account Washingtonian Problems (@washingtonianprobs) over Labor Day weekend.

(Note: if you haven’t seen it, the video is available on Instagram.)

Of course, social media is full of colorful speculation. What is in the bag? Is it…the Epstein files? Laundry? Is Melania throwing him out?

A White House aide told reporters that the video shows a contractor doing regular maintenance, perhaps on the Lincoln Bedroom and bathroom, which are due for renovation. But when asked about it, President Trump suggested the video was “probably AI-generated.”

Is that video AI-generated? Maybe. As a researcher who works on generative AI and has studied deepfakes, my hunch is that the video is not AI-generated. But I suppose it could be.

And therein lies the problem.

There is no single, fully accurate tool that can identify AI-generated text, images, and videos. Some tools exist, but none of them are perfect (or even near-perfect).

On the one hand, we are rightfully concerned that synthetic (fake) content circulating online will be interpreted as true. Synthetic content can be created and used for manipulation, coercion, and all kinds of nefarious purposes. This problem is now widely discussed.

On the other hand, the White House video and Trump’s reaction highlight the flip side of this problem: real content can just as easily be dismissed as synthetic.

That uncertainty is what makes generative AI so destabilizing. If anything can be fake, then everything is up for debate. People don’t just question a viral clip of a bag tossed from a White House window — they start to question press releases, news articles, official statements, even live video. The result is an erosion of trust in the very channels that are supposed to anchor public life.

This erosion is especially dangerous for elected officials. Their ability to communicate depends on trust — the idea that when they say something on the record, their words and images carry weight.

Problematically, AI-driven chaos feeds a deeper human impulse: we’re all tempted to dismiss what doesn’t fit our worldview and embrace what does. When facts themselves feel malleable, people gravitate toward confirmation bias, treating “truth” as a matter of preference. That is the ultimate risk of the AI information age — not just more fakes, but a public square where consensus reality splinters.

That’s why local leaders matter more than ever. In this AI age, people still trust the voices they know — the mayor who shows up at the town hall, the council member who returns calls, the school board member who answers questions face-to-face. These relationships cut through the digital fog because they’re rooted in lived, shared experience.

For elected officials at every level, but especially at the local level, the challenge is not just to communicate clearly — it’s to build and reinforce those tangible relationships that remind people what trust looks like in practice. As generative AI blurs the line between truth and fiction, authentic connection with constituents becomes the most reliable safeguard.