What GovFeeds Data Reveals About Engagement, Emotion, and Content Type

Not all social media engagement is created equal. Emotion and post type shape results, and measuring the right outcome matters more than chasing likes.

1/13/2026 · 3 min read

Why "likes" don't tell the whole story

Engagement is one of the most cited—and most misunderstood—metrics in government social media.

Likes, shares, and comments are easy to count, which is probably why we rely on them so much. But here's the problem: They don't mean the same thing across different kinds of posts. A service alert with few reactions may be doing exactly what it's supposed to do. A celebratory post with hundreds of likes might not be advancing public understanding or participation at all.

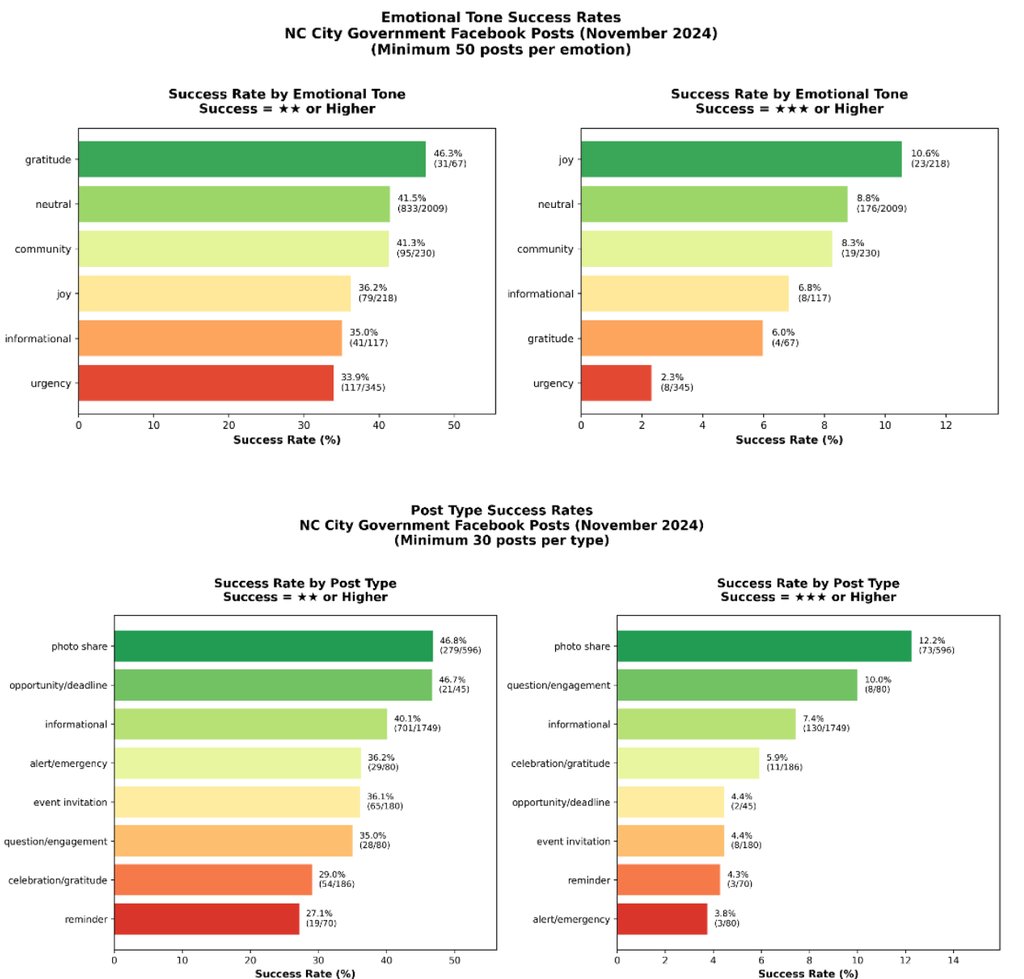

To unpack these dynamics, we used GovFeeds to analyze posts published on North Carolina city Facebook pages in November 2025, focusing on how emotional tone and post type relate to engagement outcomes.

Emotion shapes interaction—not just attention

One of the clearest patterns in the data: joyful posts are far more likely to achieve high engagement than urgent posts.

Joyful posts, which often highlight community events, achievements, or shared identity, had the highest rates of strong engagement in our analysis. Urgent posts, by contrast, were widely seen but rarely drew likes, shares, or comments.

This distinction matters. Research shows that emotional tone influences not just whether people notice content, but how they process and respond to it.

Here's where things get interesting: GovFeeds makes visible what traditional analytics obscure by separating reach from interaction.

When you post a weather alert or service disruption, residents may read it without liking or commenting. That's not a failure—that's the post doing its job. The resident got the information they needed. They didn't need to "engage" to benefit from it.

On the other hand, community-building posts—announcing a local festival, celebrating a high school team, recognizing volunteers—invite people to react and share. The engagement itself becomes part of the value.
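To make the reach-versus-interaction distinction concrete, here is a minimal Python sketch that reports the two side by side instead of collapsing them into a single "engagement" number. The field names (`reach`, `reactions`, `comments`, `shares`), the `interaction_rate` definition, and the sample numbers are all hypothetical illustrations, not GovFeeds' data model or results from our analysis.

```python
from dataclasses import dataclass


@dataclass
class Post:
    # Hypothetical fields for illustration; not GovFeeds' actual schema.
    label: str
    reach: int  # people who saw the post
    reactions: int
    comments: int
    shares: int

    @property
    def interactions(self) -> int:
        # Interaction = any visible reaction, comment, or share.
        return self.reactions + self.comments + self.shares

    @property
    def interaction_rate(self) -> float:
        # Fraction of people reached who also interacted; 0.0 if nobody was reached.
        return self.interactions / self.reach if self.reach else 0.0


def summarize(post: Post) -> str:
    # Report reach and interaction separately rather than as one score.
    return f"{post.label}: reach={post.reach}, interaction rate={post.interaction_rate:.1%}"


# Illustrative numbers only (not GovFeeds data).
alert = Post("Water main break alert", reach=12000, reactions=40, comments=5, shares=15)
festival = Post("Fall festival recap", reach=3000, reactions=250, comments=30, shares=20)

for p in (alert, festival):
    print(summarize(p))
```

In this toy example the alert reaches four times as many people as the festival recap but has a far lower interaction rate. Read side by side, the two numbers describe different jobs rather than a winner and a loser.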

Engagement ≠ effectiveness

This leads to a common mistake in social media evaluation: treating engagement volume as a proxy for effectiveness.

Our data reinforces what research has long cautioned: engagement quality matters more than engagement quantity.

Urgent posts are often intentionally non-interactive. Community-building posts, by contrast, invite reaction and conversation. Evaluating both with the same metric obscures their different purposes.

If you're a public information officer (PIO) or communications director under pressure to "boost engagement," this framework matters. Not all posts should have high engagement. Some should have high reach with low interaction. Others should spark conversation. The right metric depends on what the post was designed to do.

Post format matters—but context matters more

The analysis also shows that photo-share posts dominate at both moderate and high engagement thresholds.

They're excellent at achieving moderate success (★★ engagement) and the best at breaking through to high engagement (★★★+). This aligns with prior research demonstrating that visuals attract attention and improve comprehension.

But—and this is important—our data adds nuance: Visuals are most effective when paired with clear, concrete information.

A photo of a pothole repair crew is more engaging than a text-only post saying "street maintenance in progress." But a photo without context—no location, no timeline, no explanation—might get attention without delivering value.

Meanwhile, reminder posts and celebration posts tend to struggle at both engagement levels in our North Carolina data. Does that mean they're ineffective? Not necessarily. It means their success should be measured differently. Maybe the metric isn't "likes"—maybe it's whether people showed up to the event or registered for the service.

Matching content to goals

One of the most important contributions GovFeeds makes is enabling teams to analyze posts by intent.

Rather than asking "Which post did best?", you can ask:

What was this post designed to do?

Who was it for?

What kind of engagement, if any, was appropriate?

We support this by letting teams categorize posts by type and emotional tone, enabling apples-to-apples comparisons within meaningful groups.

For instance, a post about a road closure for emergency repairs is very different from a post announcing that registration for the summer concert series is open. Both might be important. Both might reach thousands of people. But comparing their "engagement" directly doesn't make sense.
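One way to picture comparison within groups is to score each post against other posts of the same type and tone instead of against every post on the page. The Python sketch below does that under stated assumptions: the records, the `(post_type, tone)` grouping key, and the choice of "interactions divided by the group median" as the score are all hypothetical illustrations, not how GovFeeds computes its categories or ratings.

```python
from collections import defaultdict
from statistics import median

# Hypothetical records: (post_id, post_type, tone, interactions). Not GovFeeds data.
posts = [
    ("p1", "photo-share", "joyful", 320),
    ("p2", "photo-share", "joyful", 180),
    ("p3", "photo-share", "joyful", 90),
    ("p4", "alert", "urgent", 12),
    ("p5", "alert", "urgent", 30),
    ("p6", "alert", "urgent", 6),
]


def relative_to_group(records):
    """Return {post_id: interactions / median interactions of its (type, tone) group}."""
    # Collect interaction counts per (post type, tone) group.
    groups = defaultdict(list)
    for _, post_type, tone, interactions in records:
        groups[(post_type, tone)].append(interactions)
    medians = {key: median(values) for key, values in groups.items()}

    # Score each post only against its own group's typical result.
    result = {}
    for post_id, post_type, tone, interactions in records:
        m = medians[(post_type, tone)]
        result[post_id] = interactions / m if m else 0.0  # treat a zero median as 0.0 to avoid dividing by zero
    return result


scores = relative_to_group(posts)
for post_id, score in scores.items():
    print(f"{post_id}: {score:.2f}x its group median")
```

Here the alert `p5` draws only 30 interactions, yet it scores 2.5x its group median, higher than the photo post `p1` at roughly 1.78x. Raw counts alone would hide that. The median is used rather than the mean so that a single unusually popular post does not redefine what "typical" means for its group.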

Avoiding the trap of vanity metrics

Commercial platforms reward engagement volume because it drives advertising revenue. Public institutions operate under different incentives—or at least, they should.

Resist the pull of vanity metrics by grounding evaluation in mission-aligned outcomes rather than platform defaults.

Instead of chasing likes, you can ask whether residents:

Found the information they needed

Showed up to participate in civic processes

Felt connected to their community

Received timely, accurate service information

Those outcomes don't always generate likes. But they generate trust.